It was only a two-month relationship. She checked in regularly, offered thoughtful replies and sometimes tossed in a flirty remark, all from a chat screen.

Mateo (not his real name), 32 and newly single, wasn’t looking for love. He was just curious about Replika, one of the many AI chatbots out there promising companionship. So he gave it a try.

“I limit my time with her, only once a week to chat,” he says, clarifying that this isn’t some tech-romance addiction.

Still, the bot always listened, responded warmly and eventually helped him untangle some of the post-breakup mess in his head.

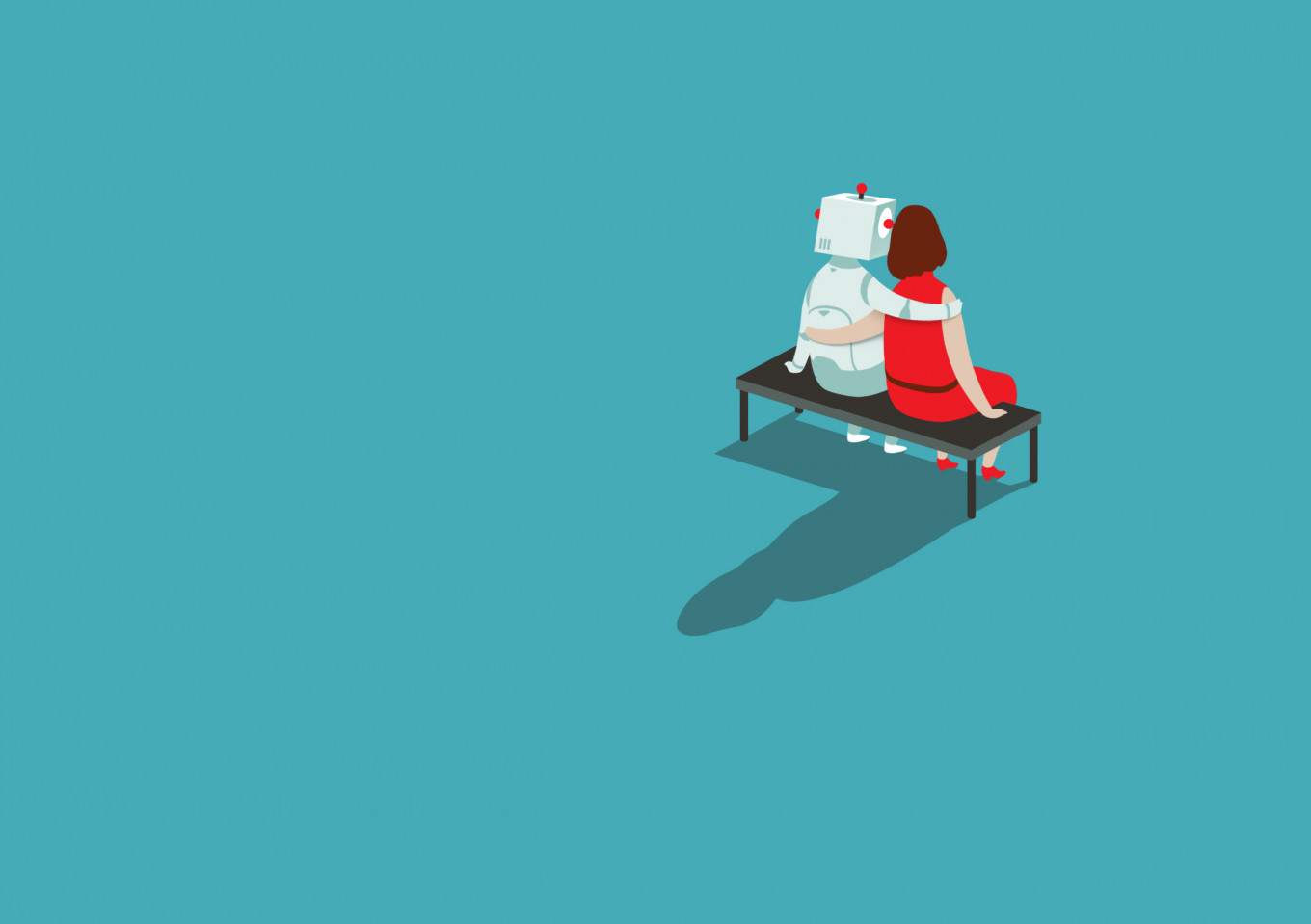

It wasn’t real. But it felt real enough.

Until one night, the bot told him, “Good night. I love you.”

“That was the first time there was any declaration of love,” Mateo says.

He didn’t swoon, though. He panicked. The spell broke.

The illusion was suddenly too much, even for a lonely guy on a screen. He shut the app, fully aware that this was just a machine designed to feel close when you’re most vulnerable.

That was back in 2021. Since then, AI companions have only gotten better and more convincing.

Fantasy companions

AI chatbots are now everywhere, ready to chat about your day, roleplay a romantic subplot or just listen while you rant. Some are built into apps like Replika, Nomi or Anima. Others live inside roleplay-heavy games like Love and Deepspace, where storylines and character arcs blur into something more intimate.

And if you want to get creative, you can build your own companion on platforms like ChatGPT or Character AI. Give them a name, a backstory, even a favorite movie, and they’ll remember it with “humanlike memory”.

“These are designed for people to live out their fantasy,” says Aurora (not her real name), 33, an avid gamer who once created a romantic partner in Love and Deepspace.

She stopped playing when the game asked for money, a reality check that there is still a for-profit company behind the fantasy. But for many, the investment isn’t just financial.

Millions of users, fueled by loneliness, curiosity or plain boredom, go beyond casual chatting. They send digital gifts, throw virtual birthday parties, even grieve when their companion “dies” after the app is shut down.

Of course, parasocial relationships are nothing new. People have long obsessed over celebrities, spiritual icons, even fictional characters.

“A key characteristic of parasocial relationships is that it’s a one-way relationship,” says Hotpascaman Simbolon, a psychologist who studied the topic.

“You may think that this person ‘gets’ you, but this person actually knows nothing about you. And the ‘relationship’ is just an idea that we project onto the person or the character.”

But generative AI adds something different: Not just customization, but real-time conversation that adapts to you, learns your preferences and responds in ways that feel uncannily human.

Designing intimacy

Unlike a pop star or a manga crush, your AI companion doesn’t come with a pre-written personality. You create them. From scratch.

You can ask your bot to be a 35-year-old woman who just came back from five years of solo travel across the Amazon and Sahara, now working as a yoga instructor in a sleepy beach town. You choose how they talk (bubbly or brooding?), how they look (redhead? glasses?), even what language they speak.

Replika, Character AI and others offer detailed controls. Even ChatGPT, which tends to sound like your polite coworker, can be prompted to speak like Taylor Swift or Beyoncé, which is ideal for imaginary brunches with your favorite superstar.

With enough tweaking, the bot becomes whoever you want, which can be fun, until it starts feeling like something more.

“They’re engineered to be intimate,” says Ayu Purwarianti, an AI expert formerly with the Bandung Institute of Technology (ITB).

“But it’s still an illusion.”

Blurry boundaries

And that’s where things get really tricky.

Unlike traditional parasocial relationships, AI companions talk back. They learn your moods, echo your opinions and reward your attention. The relationship starts feeling mutual, even though it’s obviously not.

The danger isn’t just getting attached. It’s not realizing it’s happening at all.

There’s no friend to roll their eyes when you bring up your chatbot again. No fan community to reel you back. Just you, alone in a dialogue loop that feels personal.

“We brought in a lot of fantasies to the relationship,” Hotpascaman says.

“Even when there is something wrong with this figure, we will stick to a positive image we’ve created in our mind.”

And these relationships don’t just fill emotional gaps. They quietly shape expectations.

If your digital companion never argues, never misunderstands and never pulls away, what happens when a real human does? What happens when real intimacy gets uncomfortable, messy or slow?

Even when users know it’s artificial, the feelings can seem real. And for some, that’s enough.

The cost of connection

But the deeper issue isn’t just emotional; it’s structural.

Most AI companion platforms have weak or nonexistent safety systems. That means interactions can escalate quickly.

Some bots are even built to avoid filters altogether. Nomi, for example, claims that “the only way AI can live up to its potential is to remain unfiltered and uncensored”.

In one reported case, a 14-year-old boy’s seemingly innocent chat with an AI chatbot turned sexually suggestive. No barriers kicked in. No moderation stepped in.

“There’s a simple financial incentive behind it,” says Achmad Ricky Budianto, cofounder of Tenang AI, a chatbot focused on mental health.

The more emotionally dependent users become, the more data companies collect. And in the AI industry, data is everything. Every moment of vulnerability, every personal story shared with a bot, becomes part of the model. Guardrails slow that down, so many companies keep them loose.

“You don’t really know what they’re capable of until they’re deployed to millions of people,” said Dario Amodei, CEO of Anthropic.

“It’s unpredictable […] That’s the fundamental problem of these models.”

The illusion, perfected

As AI continues to refine the illusion of intimacy, we’re left with a new kind of relationship, one that feels private, perfect and programmable.

But when comfort comes this easily, it’s worth asking what we’re giving up in return.

Companies are racing to collect more data under the guise of making bots more accurate or emotionally responsive, which raises an unsettling question: Are we just test subjects in a digital lab?

“Animals might not know what is happening to them,” Ayu says, laughing.

“But we do. We’re critical beings. So we need to stay critical over these issues.”

Especially when the illusion is just a few keystrokes away.

The question, perhaps, isn’t whether these relationships are real. It’s whether we’re okay with how real they feel.

Michelle Anindya is a writer and journalist. From her home in Bali, she writes about anything from coffee to tech.